One thing many mathematically-inclined programmers run into when implementing mathematical algorithms, particularly number-theoretic ones, is that the modulo operation doesn’t behave how they expect or prefer.

In many languages, this operator is denoted %.

Concretely, one might prefer that, if the second argument is positive,

then the modulo operation would always give a nonnegative result. Under

this behavior, the expression (-5) % 3 would evaluate to

1 rather than -2. This is a lot more useful

for number theory because then for positive integers n, the

% n operation actually maps integers to exactly the

n canonical representatives for the residue classes. As a

result, \(a \equiv b \mod n\) if and

only if a % n == b % n. You can also do things like index

into a length-n array with a % n and know that

the index will be in-bounds. Finally, there are also optimization

opportunities: modding by a power of 2 becomes equivalent to a simple

bitwise AND, which is really fast on modern computers.

A few programming languages, notably Python, do implement

% this way. However, the majority of languages today,

including pretty much everything remotely descended from C, do not;

instead, (-5) % 3 is -2. This post attempts to

track down why.

The first thing to note is that there is a more important number-theoretic identity we’d like to have:

\[\texttt{a} = (\texttt{a / b}) \cdot \texttt{b} + (\texttt{a \% b}). \tag{1}\]

In words, the integer division and modulo operators should give a

breakdown of a into a sum of some copies of b

plus a remainder. Note that this equation also implies that specifying

the rounding behavior of division is equivalent to specifying the sign

behavior of the modulo operation, which will come up repeatedly

later.

It’s also very uncontroversial that that remainder should have no

copies of b, positive or negative, left over, which gives

the constraint:

\[|\texttt{a \% b}| < |\texttt{b}|. \tag{2}\]

Every programming language I can think of satisfies these two

constraints.1 So far so good. However, these two

constraints don’t uniquely determine the values of a % b

when a isn’t divisible by b; there are two

possible values for a % b, one positive and one negative.

Concretely, we could express \(-5\) as

either \((-1) \cdot 3 + (-2)\) or \((-2) \cdot 3 + 1\), so

(-5) % 3 could be -2 or 1.

It’s still mostly uncontroversial that, if a and

b are both positive, then a % b should be

nonnegative as well; we could call this constraint (3).2

However, if a is negative and b is positive,

programming languages start to diverge in their behavior. Why?

Negative Dividend

As mentioned, in modern C, (-5) % 3 is -2.

In general, the result of a modulo operation in C has the sign

of the dividend. (Just to make our terminology explicit: when

we divide or mod a by b, a is

called the dividend, b is called the

divisor, the result of the division is the

quotient, and the result of the modulo operation is

called the remainder.) It makes a lot of sense that

programming languages in the lineage of C would copy the behavior of C’s

%, simply to maintain backwards compatibility.

In fact, it makes sense even for C itself, which is low-level enough to be fairly beholden to the instruction set being targeted. I think it is a strong design constraint that the syntactically simplest way of performing a modulo operation should compile to a single instruction, if such an instruction is available; and, on modern computer architectures, it is. Just as the most front-of-mind example, the documentation for the x86 IDIV instruction says:

Non-integral results are truncated (chopped) towards 0. The remainder is always less than the divisor in magnitude.

You can verify for yourself that under (1), division truncating towards zero corresponds to a remainder with the sign of the dividend; so if the dividend is nonpositive, the remainder will also be nonpositive.

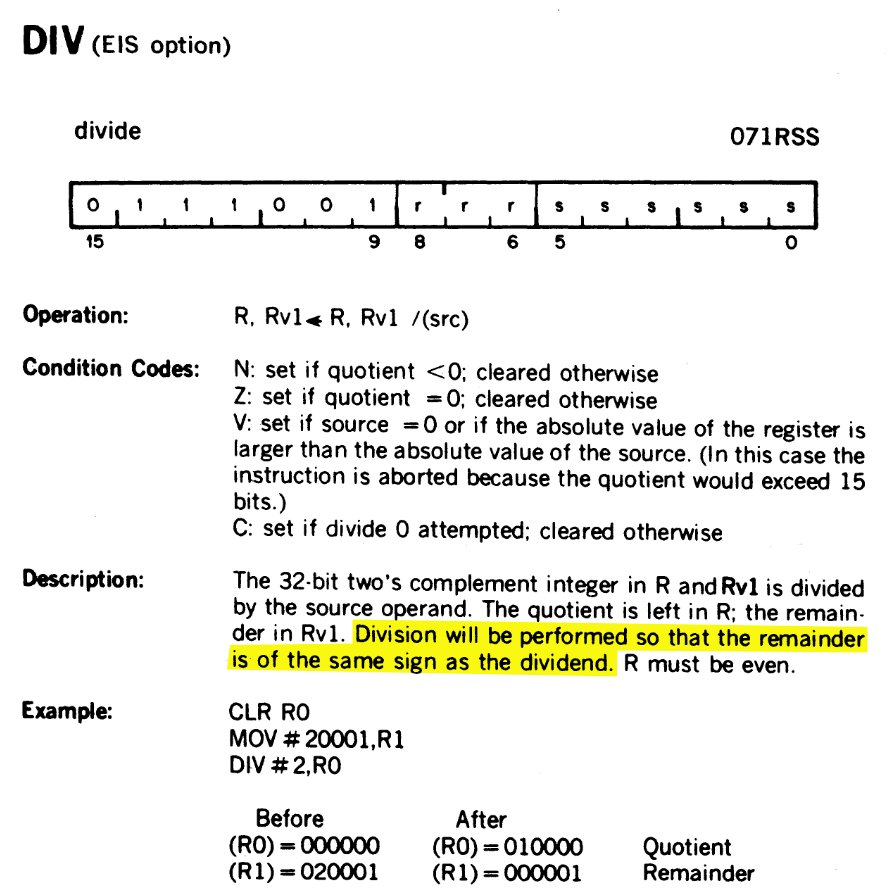

At least, that was my initial reaction, until I realized that C (1972) predates x86 (1978) by quite a few years. C was designed in tandem with the development of Unix for the PDP-11. Fortunately, you can find the PDP-11 manual (PDF) online, and in it, the documentation for its DIV instruction:

Division will be performed so that the remainder is of the same sign as the dividend.

So that provides one layer of justification, but we’re kind of just kicking the can down the road: why did the PDP-11 perform division in this way?

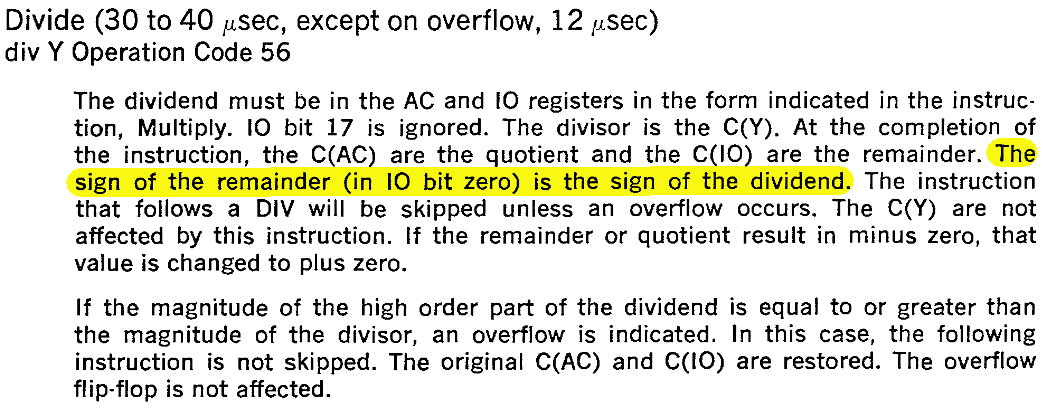

Well, as its name might suggest, the PDP-11 had its own backwards compatibility issues to worry about. If we jump back to the PDP-1 manual, we can find:

The sign of the remainder (in IO bit zero) is the sign of the dividend.

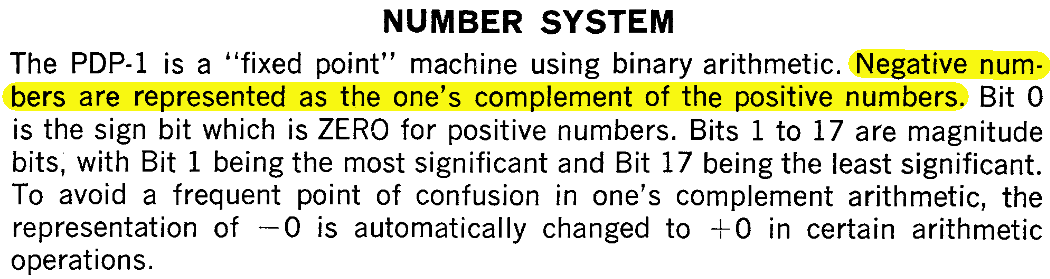

However, this time the choice of sign has a more fundamental justification, which is that the PDP-1 used a one’s-complement representation3 of its numbers.

Negative numbers are represented as the one’s complement of the positive numbers.

One’s-complement has fallen out of favor in modern processors because handling overflow/carrying in addition/subtraction is worse, as is having distinct positive and negative zeroes. Nevertheless, if you want to perform a modulo operation, the sign-of-dividend remainder is easier to compute than the nonnegative remainder. Regardless of the dividend’s sign, you can divide the dividend’s magnitude by the divisor, and simply flip the signs of the remainder (and the quotient) afterwards if the dividend was negative. So this is one justification for why the PDP series and C all produce remainders matching the sign of the dividend.

In fact, there’s more. It might have been that this choice of sign was the behavior in practice, but C89 deliberately did not specify the rounding behavior, as the spec4 says (emphasis mine):

When integers are divided and the division is inexact, if both operands are positive the result of the

/operator is the largest integer less than the algebraic quotient and the result of the%operator is positive. If either operand is negative, whether the result of the/operator is the largest integer less than the algebraic quotient or the smallest integer greater than the algebraic quotient is implementation-defined, as is the sign of the result of the%operator. If the quotienta/bis representable, the expression(a/b)*b + a%bshall equal a.

The spec requires that the division and modulo operations satisfy

constraints (1), (2), and (3), but deliberately does not specify the

sign of the remainder if it’s still ambiguous, presumably so different

hardware with different division/modulo semantics can still implement

% in a single instruction. However, if you skip a few years

ahead, the C99 specification does specify the sign of the

remainder, and §6.5.5 of the

C99 rationale explains that this was for compatibility with (wait

for it) Fortran:

In C89, division of integers involving negative operands could round upward or downward in an implementation-defined manner; the intent was to avoid incurring overhead in run-time code to check for special cases and enforce specific behavior. In Fortran, however, the result will always truncate toward zero, and the overhead seems to be acceptable to the numeric programming community. Therefore, C99 now requires similar behavior, which should facilitate porting of code from Fortran to C.

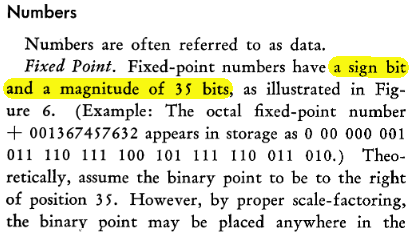

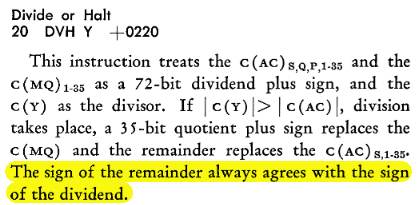

Fortran, in turn, was initially designed for the IBM 704, which used the even harder-to-use sign-magnitude representation for its numbers. The IBM 704 manual:

Fixed-point numbers have a sign bit and a magnitude of 35 bits […]

And, to nobody’s surprise, the documentation for the IBM 704’s division instruction says:

The sign of the remainder always agrees with the sign of the dividend.

Due to its nice sign symmetry, the advantage of a sign-of-dividend modulo result is even starker if you’re working with sign-magnitude numbers.

So, in a nutshell, the modulo operations of C and languages descended from C are influenced by backwards compatibility from the days when computer scientists and engineers hadn’t settled on two’s complement as a number representation.5

I should add, though, that although I think this backwards

compatibility is the strongest reason we have sign-of-dividend remainder

today, it’s not the only possible reason. Sign-of-dividend remainder

also has advantages when applied to floating-point numbers: for example,

in today’s standard double-precision floating-point numbers,

-1e-18 % 1 is just -1e-18 if the remainder has

the sign of the dividend, but loses precision if you want the positive

remainder because the number 1 - 1e-18 gets rounded to

1.6 And of course, if your language has

an % operator that works on both integers and on

floating-point numbers, it’s probably desirable for its mathematical

behavior on both to be consistent.

Also, there are a fair number of programming languages that provide

both types of modulo operation we’ve seen so far, e.g. Ada, Clojure,

Common Lisp, and Haskell. They often differentiate the two operations by

calling the variant producing a sign-of-dividend remainder “remainder”

or rem, and the variant matching Python’s behavior “modulo”

or mod. (A more atypical example is CoffeeScript, which

inherits JavaScript’s remainder operation % and defines a

modulo operation with the symbol %%.) Wikipedia has a more

complete list of programming languages by their behavior.

Up to now, though, I’ve been tiptoeing around another case for the modulo operation: What if the divisor is negative?

Negative Divisor

I think using the modulo operation with a negative divisor is much rarer in practice: usually, either the divisor is a fixed positive constant7, or it arises from a context where it’s definitely positive, such as being an array length.

Still, it turns out that in most languages, if the modulo operation

doesn’t take the sign of the dividend, or if there’s a mod

operator/function separate from the rem function, it

usually computes a result with the same sign as the

divisor. So, for example, in Python, 5 % -3 is

-1.

This behavior might seem a little strange or mathematically undesirable at first, but the justifications I mentioned at the start of this post aren’t so strong any more. Instead, if you plug sign-of-divisor remainder into equation (1), you can work out that this corresponds to the mathematically neat definition of integer division that always rounds down, or floors: \[\texttt{n / a} = \left\lfloor\frac{\texttt{n}}{\texttt{a}}\right\rfloor.\]

In light of this observation, it’s worth checking more completely if the claim that sign-of-dividend remainder is easier to compute in one’s-complement or sign-magnitude numbers still holds up. It does: the sign symmetry is still better. With sign-of-dividend remainder, we have the nice identities \[(-n)\;\texttt{rem}\;a = -(n\;\texttt{rem}\;a)\] and \[n\;\texttt{rem}\;a = n\;\texttt{rem}\;(-a).\] That is, negating the dividend negates the result, and negating the divisor doesn’t affect it. So, we can drop the signs from both dividend and divisor, compute the remainder, and negate the result iff the dividend started out negative.

With sign-of-divisor modulus, we don’t have nice identities like this. The best we can do is \[(-n)\;\texttt{mod}\;(-a) = -(n\;\texttt{mod}\;a):\] negating both dividend and divisor negates the result. There isn’t a neat identity spanning the other direction because their arithmetic relation depends on if the result is zero.

One clearer way to see the affinity between sign-of-dividend remainder and one’s-complement/sign-magnitude numbers is to think about the optimization cases. In either representation, dividing or modding a number by a fixed power of two is easier with truncating division and sign-of-dividend remainder; division is a bit right shift and modding is just taking the last few bits (both with sign extension if one’s-complement). Of course, as mentioned at the start of this post, the same affinity links flooring division and sign-of-divisor remainder to two’s-complement. Flooring division by a power of two is a bit right shift in two’s-complement (again, with sign extension if needed); modding by a power of two is just taking the last few bits.

Now, however, we’re left with a rectangle to complete. Which kind of division and modulo is easier to implement, fully generally, in today’s hardware, with today’s two’s-complement numbers and algorithmic understanding?

I wasn’t able to find any resources online examining this question exactly — nearly everybody either only gives an algorithm for dividing/modding two nonnegative integers, or just says to remove the signs from both operands and fix the result at the end — but based on my limited understanding of hardware and division algorithms, I think the rectangle completes as expected: in two’s-complement, sign-of-divisor has a slight advantage.

Division Algorithms and Sign

Wikipedia has pretty nice descriptions of the “slow” division algorithms used to do division and modulo in hardware. Here, though, I’ll try to present these algorithms with pseudocode that’s even less efficient but even conceptually simpler than what Wikipedia has.

First, “restoring division”:

r = dividend

q = 0

for i from ((bit width of r) - 1) down to 0:

# the following shift must use a wider integer to not overflow

# (and in practice, divisor will be shifted left at the start

# and shifted right once each loop)

new_r = r - (divisor << i)

if r >= 0:

q += (1 << i)

r = new_rBasically, for each power-of-two multiple of the divisor starting from the largest, you try to subtract it from the dividend; if the difference is nonnegative, you write down 1 in your quotient and set the new dividend to that difference; otherwise, you write down 0 in your quotient and keep the dividend unchanged. The division is called “restoring” because you can equivalently think of it as always subtracting the divisor’s multiple from the dividend, but then backtracking and “restoring” the old dividend if you got a negative result.

This algorithm assumes that the dividend and divisor are both nonnegative. There isn’t a nice way to make it sign-agnostic, so I think it favors sign-of-dividend remainder. However, it is not the best hardware division algorithm in practice.

Consider non-restoring division:

r = dividend

q = 0

for i from ((bit width of r) - 1) down to 0:

# Same caveats as above: shift must use wider integer,

# divisor wouldn't be shifted dynamically.

# Also, instead of adding numbers to q, we'd store the sign of each

# addition as individual bits and convert it to a two's-complement number

# in a single step at the end.

if r >= 0:

q += (1 << i)

r -= (divisor << i)

else:

q -= (1 << i)

r += (divisor << i)Whereas restoring division tries to subtract multiples of the divisor

and then undoes its work if the answer is negative, nonrestoring

division unapologetically subtracts or adds multiples of the divisor

depending on the sign of the dividend to try to send it closer to 0.

This fun algorithm produces a q in the form of a binary

representation with digits from the set \(\{+1, -1\}\); fortunately, this is not hard

to convert back to a normal binary number. It also produces a remainder

r in the range -divisor <= r < divisor,

whose sign is not terribly meaningful.8

Nonrestoring division works because after adding or subtracting

divisor << i, the value of n is in the

range

-(divisor << i) <= n < (divisor << i)

(check this). It’s kind of funny because if you do an easy division like

divide 5 by 3 as a pair of 32-bit integers, the first thing it will do

is compute the very small number \(5 - 3 \cdot

2^{31}\), stick that in r, and then spend literally

all of its remaining iterations correcting back up towards 0, which it

just manages to do. But it works, and in fact, it works equally well for

positive and negative dividends.

Nonrestoring division doesn’t work, however, with a negative divisor.

So it makes sense to check if the divisor is negative, remove the sign,

run the algorithm, and then use the initial sign of the divisor to guide

the final sign correction if necessary. This makes nonrestoring division

a good fit for computing a sign-of-divisor remainder. Also, the rest of

the algorithm is quite a good fit for two’s-complement arithmetic.

Addition, subtraction, and shifting are all easy. Checking if

n >= 0 is the easiest nontrivial comparison to do in

two’s-complement; simply check if the most significant bit of

n is unset. And if the \(\{+1,

-1\}\) “bits” of q are stored as 1 and

0 in q, you can recover a normal

two’s-complement number simply as q - ~q, which equals

2*q + 1 and actually requires no bit operations.

What’s more, it does appear to be more efficient in hardware in terms of delay. Wikipedia states this fact with a citation to a Stanford handout that doesn’t appear to demonstrate it at all (??), but, using my basic understanding of hardware,9 I will nevertheless attempt to defend this claim on my own. Since both division algorithms have equally long loops, the delay in each loop iteration is likely the most important factor; and the key difference is that the branch condition can be computed sooner in nonrestoring division.

- In one iteration of restoring division, you need to compute

new_r = r - (divisor << i), and then mux on the MSB ofnew_rto choose betweennew_randr. Crucially, these two things must happen in series, and using one bit to mux between two many-bit integers costs quite a bit of delay, because you need to add buffers to that one bit to give it enough strength to drive all the muxes. So the critical path through one iteration is something like that of one adder plus a bunch of buffers. - In one iteration of nonrestoring division, you can compute

r - (divisor << i)andr + (divisor << i)and add buffers to the MSB ofr, all in parallel. Then you can use the buffered MSB to mux between the difference and the sum. The two adders probably have longer delay than the buffer tree, so the critical path is just a little more than one adder, which is nontrivially shorter than the delay of one iteration of restoring division.

So, using nonrestoring division, sign-of-divisor modulus seems easier or more efficient to implement in hardware than sign-of-dividend remainder. Wikipedia continues on to describe SRT division, which is even faster, but it appears to share the property of nonrestoring division that the dividend bounces around positive and negative values, making the algorithm work equally well on positive and negative initial dividends. All in all, this increases my confidence that backwards compatibility is the main reason that sign-of-dividend remainder is popular today.

Some Other Possible Sign Behaviors

For completeness, we’ll mention some other options for implementing

division and modulo that are neither of the above two. For one, you

could say that the remainder should always be nonnegative. A

few languages take this option, e.g. Scheme.

Rust offers this behavior in a

family of methods on primitives, .div_euclid and

.rem_euclid (and other variants); the RFC has a

detailed discussion. This is also a plausible choice, but the

drawback is that there is no simple description of the corresponding

quotient forced by (1): you have to round down if the divisor is

positive and round up if the divisor is negative.

Scheme also offers a fourth option of div0 and

mod0, which, as mentioned in an early footnote, is unusual

for violating (3): it tries to minimize the magnitude of the

remainder,10 which corresponds to rounding

division to the closest integer. This option is suggested for

floating-point numbers in the Python

History blog post, because magnitude-minimization is valuable when

working with floating-point numbers.

A summary of the four options covered:

| Quotient | Remainder | Benefits |

|---|---|---|

| Truncate towards zero | Sign of dividend | Backwards-compatible; more sign symmetry; better preserves floating-point representability |

| Floor (round down) | Sign of divisor | Mathematically nice; better optimization opportunities in two’s-complement |

| Round away from sign of divisor | Always nonnegative | Remainder is mathematically nice |

| Round to closest integer | Minimum magnitude | Magnitude reduction is good for floating-point precision |

Sources

Multiple footnotes link to the Python History blog post Why Python’s integer division floors. That post mentions the core claim of this one, that sign-of-dividend remainder is based on older computers using sign-magnitude number representation, and probably inspired it entirely. It also mentions the floating-point advantage. After more research, though, one of the most complete analyses I found after more research was somewhat unexpectedly Issue #250 on the WebAssembly design repo, which led me to the Fortran connection. A few people have posted hardware division implementations; here’s a StackExchange answer with (massive) hardware diagrams, and somebody’s SystemVerilog implementation. Finally, although this is almost no evidence at all, here’s internet person mentioning PDP-1.